Security is Not an AI Problem

In this post I cover:

Despite the hype right now, AI does not solve security problems well

The areas where AI does well and where it struggles, and why security problems overlap with the latter

How generative AI specifically is useful in some cases but has significant drawbacks for most security use cases

If you follow any tech or security journalism right now, you’re getting a pretty consistent message: AI is the future. Every month we see the crazy progress of large language models (LLMs) like ChatGPT / GPT-4, Bard, Gemini, and LLaMA, and of image generators like DALL-E and Stable Diffusion. Self-driving seems like it's only a few years away (I'm sure it actually is this time). IBM beat humans at Jeopardy years ago, and the machine's triumph over humanity continues along a John Henry-esque trajectory. A lot of smart people keep mentioning the scariness of the singularity.

As a good security practitioner, you should investigate this new magic. There's so much data you're already collecting in all your logs; you have the sensors, but you just don't have the budget or capacity to have enough people look through it all. This sort of thing is tailor-made for AI. AI is the thing that will let us get the hang of detection and response. Maybe we can finally enjoy a holiday weekend in peace?

Unfortunately, it’s mostly crap.

I complain a lot about snake oil salesmen. Right now, many of these snake oil salesmen tend to be AI vendors. This is a problem throughout tech, but especially in the security space. Most of the legitimate uses of AI are not applicable to the security problems we face. This was disappointing to me, since I got a master's in data science solely to move into the data-driven security space, only to realize the value just isn't there.

Note: I've written AI four times already, and I'm already gagging from the marketing speak of it, so I'm switching to machine learning (ML) as the term for the rest of this post.

Where ML Falls Flat

To clarify: by ML in this context I mean tools, products, and vendors where ML is the central method to solve a significant security problem. Using anomaly detection to "replace your SIEM", "never write a rule again", "slash your false positives", "only give you critical alerts", and "turbocharge your response times", that kind of thing. Systems that put ML as the primary basis for your protection, and not something at the margins. Adding NLP to phishing prevention makes sense; it's not perfect, but it's not your primary defense, and I’m not addressing those kinds of use cases here.

Unkind Environments

Advances in machine learning, especially in deep neural networks and generative AI, have produced some amazing output. It can caption our speech at near-instant speed with crazy accuracy. It can write poetry and create art. It can beat us at StarCraft.

These are all wondrous advancements, but they operate in kind learning environments. Kind learning environments are ones where the rules are clearly defined and consistent, the feedback is immediate, and the model’s training data doesn’t become obsolete very often. It helps that a few mistakes aren't a big deal in basically every area in which ML has taken off.

Sadly, ML does pretty poorly in unkind environments. Teslas drive into lane separators and emergency vehicles. IBM's health AI push with Watson failed miserably. DeepMind's continued and depressingly successful attempts to crush us at childhood games are vulnerable to something as simple as dropping troops in a way the AI hadn't seen before. Even the much-hyped ChatGPT is widely panned for its constant, inaccurate mansplaining (model-splaining?).

Security is a really fucking unkind learning environment. The rules are not clearly defined, and they change constantly, through the actions of both the blue teams and the attackers. The feedback from an event can take hours, days, or even years to manifest. The models themselves can be attacked. False positives can overwhelm your teams, and false negatives lead to compromise. These are problems we've been dealing with in the space for decades, but they're crippling for ML systems. If DALL-E uses Paddington instead of Winnie in your generated image of a British bear eating honey, that's a laugh. If your car thinks the wall in front of you is a 75 MPH speed sign, you die. The latter problem is harder than the former, and the wall isn't intentionally changing its appearance to seem less like a wall to ruin your model’s predictions.

ML Works Best on Semi-Noisy Data

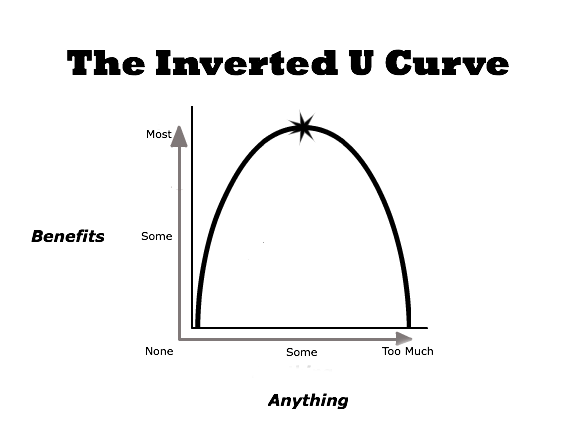

ML effectiveness follows an inverted U-shaped curve:

An inverted U-shaped curve means something works well in the middle of a range but badly at the extremes, like many vitamins, where consuming either too little or too much will kill you. ML is the same way with signal and noise in the data. If the data has a high enough signal, you don't need ML; procedural rules work great. If you're building a red color detector and you see the wavelength of red, it's red. You don't need a 10 billion parameter model to tell you that. The same goes if a file's hash is flagged on VirusTotal, if one of your internal web services reaches out to a known command-and-control (C2) server IP, or if you want to tell whether it's currently raining.
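To illustrate the high-signal end, here's a minimal sketch of a procedural indicator-of-compromise rule. The event fields (`service`, `dest_ip`) and the C2 list are hypothetical; the addresses come from the RFC 5737 documentation ranges, not from real threat intel.

```python
import ipaddress

# Hypothetical threat-intel feed of known C2 addresses.
# These are RFC 5737 documentation addresses, not real indicators.
KNOWN_C2_IPS = {
    ipaddress.ip_address("203.0.113.66"),
    ipaddress.ip_address("198.51.100.23"),
}


def is_c2_connection(dest_ip: str) -> bool:
    """High-signal rule: an exact match against a known-bad IP needs no model."""
    return ipaddress.ip_address(dest_ip) in KNOWN_C2_IPS


def find_c2_alerts(events: list[dict]) -> list[dict]:
    """Return every outbound connection event whose destination is a known C2 IP."""
    return [e for e in events if is_c2_connection(e["dest_ip"])]


events = [
    {"service": "billing-api", "dest_ip": "192.0.2.10"},
    {"service": "auth-api", "dest_ip": "203.0.113.66"},
]
print(find_c2_alerts(events))
```

There's no training data and no threshold to tune, and when it fires, you know exactly why.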

At the other end, ML doesn't work well if there is too low a signal. If you're trying to detect a subtle event that happens only once out of 1,000,000,000 requests, you won't get enough data to train a model that's more useful than just writing a procedural rule.
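The low-signal end hurts at alert time too, because of the base rate. A minimal sketch using Bayes' rule, with assumed, illustrative numbers (one malicious request per billion, and a detector that catches 99% of attacks with a 0.1% false positive rate), shows how a seemingly accurate model ends up producing mostly noise:

```python
def alert_precision(base_rate: float, tpr: float, fpr: float) -> float:
    """Probability that an alert is a real attack, via Bayes' rule.

    base_rate: fraction of events that are actually malicious
    tpr: true positive rate (share of attacks the detector flags)
    fpr: false positive rate (share of benign events it flags)
    """
    true_alerts = base_rate * tpr
    false_alerts = (1 - base_rate) * fpr
    return true_alerts / (true_alerts + false_alerts)


# Assumed numbers, for illustration only.
precision = alert_precision(base_rate=1e-9, tpr=0.99, fpr=0.001)
# Approximate benign events flagged per billion requests.
expected_false_alarms = 1_000_000_000 * 0.001
print(f"precision={precision:.2e}, false alarms per billion requests={expected_false_alarms:,.0f}")
```

Under these assumptions, roughly one alert in a million is real, and your team wades through about a million false alarms to find it.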

If the data has the right signal-to-noise ratio, ML is a wonderful solution. Security problems, unfortunately, exist mostly at the extremes.

Procedural Rules and Good Configuration Get You 98% of the Way There

Every AI vendor I've had the misfortune of speaking with since Log4Shell has talked about how their engine would have detected Log4Shell for me. That's nice and all; catching Log4Shell exploitation isn’t totally trivial in many people’s environments, but these vendors are not using AI to detect it. They have an endpoint agent or a proxy that looks at the request going to a random external IP and sees it’s an LDAP connection. That means it’s Log4Shell; no one is making random external LDAP requests from a Java service. There’s no model prediction here, and detecting it doesn't take fancy ML black magic. The fact that this is still the primary thing AI vendors can think of pitching as a solution shows how useless the tech is.
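A minimal sketch of that kind of rule, assuming a hypothetical flow-record format with `process`, `dest_ip`, and `dest_port` fields and an RFC 1918 internal network. Matching on the standard LDAP ports is a simplification: exploit callbacks can use any port, so a real proxy or agent would identify the protocol itself rather than trust the port number.

```python
import ipaddress

# Assumption: internal address space is RFC 1918; adjust to your environment.
INTERNAL_NETS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]
LDAP_PORTS = {389, 636}  # standard LDAP and LDAPS ports


def is_external(ip: str) -> bool:
    """True if the address falls outside every internal network."""
    addr = ipaddress.ip_address(ip)
    return not any(addr in net for net in INTERNAL_NETS)


def is_log4shell_callback(event: dict) -> bool:
    """Flag a Java process opening an LDAP connection to an external host."""
    return (
        event["process"] == "java"
        and event["dest_port"] in LDAP_PORTS
        and is_external(event["dest_ip"])
    )


flows = [
    {"process": "java", "dest_ip": "10.1.2.3", "dest_port": 389},     # internal directory: fine
    {"process": "java", "dest_ip": "203.0.113.7", "dest_port": 389},  # external LDAP: alert
    {"process": "curl", "dest_ip": "203.0.113.7", "dest_port": 443},  # not LDAP: ignore
]
print([f for f in flows if is_log4shell_callback(f)])
```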

This is sort of a corollary to the above: the security problems ML detection tech claims to solve are almost entirely solved by reasonable network rules, procedural alerts in your log system/SIEM, and liberal use of canaries. These aren't that hard to do! Do those first before worrying about ML.
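Canaries show how cheap high-signal detection can be. A minimal sketch, assuming you've planted a decoy AWS access key that nothing legitimate ever uses and are reading CloudTrail records as parsed JSON (`userIdentity.accessKeyId` is a CloudTrail field; the key IDs below are AWS's documentation placeholders standing in for your own):

```python
# Hypothetical canary key; AKIAIOSFODNN7EXAMPLE is AWS's documentation placeholder.
CANARY_KEY_IDS = {"AKIAIOSFODNN7EXAMPLE"}


def canary_hits(records: list[dict]) -> list[dict]:
    """Any use of a canary credential is an alert, since it has no legitimate use."""
    return [
        r for r in records
        if r.get("userIdentity", {}).get("accessKeyId") in CANARY_KEY_IDS
    ]


records = [
    {"eventName": "ListBuckets", "userIdentity": {"accessKeyId": "AKIAI44QH8DHBEXAMPLE"}},
    {"eventName": "GetCallerIdentity", "userIdentity": {"accessKeyId": "AKIAIOSFODNN7EXAMPLE"}},
]
for hit in canary_hits(records):
    print("CANARY TRIPPED:", hit["eventName"])
```

No baseline and no model: the false positive rate is close to zero by construction.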

The products sold as fancy ML are often just fancy-sounding procedural rules anyway. Take Lacework, a company trying to sell itself to Wiz. Running their fancy ML apparently burns so much cash that they may sell for only about 1.5x revenue, yet the product provides a set of alerts[1] that look suspiciously like the procedural rules in the main Sigma rules repo.[2]
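For a sense of what such rules look like, here's a minimal sketch of a Sigma-style rule reduced to its essence: equality checks on fields of an AWS CloudTrail record. The rule is illustrative, not copied from Lacework or the Sigma repo, and real Sigma rules are written in YAML with richer matching options.

```python
from typing import Any

# Illustrative rule: alert on AWS root account console logins.
# Field names follow the CloudTrail record format.
ROOT_CONSOLE_LOGIN = {
    "title": "AWS root account console login",
    "match": {"eventName": "ConsoleLogin", "userIdentity.type": "Root"},
}


def get_field(record: dict, dotted: str) -> Any:
    """Walk a dotted path like 'userIdentity.type' through nested dicts."""
    value: Any = record
    for key in dotted.split("."):
        if not isinstance(value, dict):
            return None
        value = value.get(key)
    return value


def matches(rule: dict, record: dict) -> bool:
    """A record matches when every field in the rule equals its expected value."""
    return all(
        get_field(record, field) == expected
        for field, expected in rule["match"].items()
    )


event = {"eventName": "ConsoleLogin", "userIdentity": {"type": "Root"}}
if matches(ROOT_CONSOLE_LOGIN, event):
    print("ALERT:", ROOT_CONSOLE_LOGIN["title"])
```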

You Can't Evaluate or Understand It Easily

You shouldn't use a vendor you can't evaluate, and evaluating ML products is surprisingly hard for most companies; it's more in the tier of a PhD research project than a quick POC/POV. Sure, you can write up quick test cases and see how various products detect them, but this is constrained by your very limited capacity, and the toy test cases are all basic and easy to detect anyway. It doesn't give you any assurance that the product will actually detect a real attack.
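Here's roughly what that quick evaluation looks like, as a minimal sketch with hypothetical test-case names and results. Note how little it can tell you: a handful of labeled cases yields a recall figure with enormous uncertainty, and it says nothing about attacks you didn't think to simulate.

```python
from dataclasses import dataclass


@dataclass
class PocCase:
    name: str        # hypothetical simulated activity
    malicious: bool  # ground truth you assigned
    alerted: bool    # whether the product under evaluation fired


def score(cases: list[PocCase]) -> dict:
    """Count outcomes and compute recall over a small POC test suite."""
    tp = sum(c.malicious and c.alerted for c in cases)
    fn = sum(c.malicious and not c.alerted for c in cases)
    fp = sum(not c.malicious and c.alerted for c in cases)
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"tp": tp, "fn": fn, "fp": fp, "recall": recall}


cases = [
    PocCase("encoded_powershell_download", True, True),
    PocCase("credential_dump_default_tool", True, True),
    PocCase("credential_dump_renamed_tool", True, False),
    PocCase("admin_runs_backup_script", False, True),
]
print(score(cases))
```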

You can't understand or change a peddled product in real time if it's actually sophisticated behind the scenes and not just a logistic regression model claiming to be artificial intelligence. This means you can't explain it, and you can't improve it. Imagine your CEO asks you post-breach why Darktrace didn't catch the attack and what can be done to fix it, and your answers have to be "I have no idea" and "idk, pray." You're going to be fired.

This black-box nature also leaves your ML-based defenses vulnerable to attack in ways that are impossible to detect or understand. Here's a great paper on the subject, or a non-paywalled article going over the gist of it pretty well, or just read whatever blog post about prompt injection is trending at the moment. There is nothing tractable we can do about this right now. Academia and research have yet to solve this problem, while we struggle as an industry to just apply basic access controls correctly.

It's Expensive

Doing something but-with-AI has become a magical way for companies to try to increase their revenue by 2-3x. It's not log storage, but log storage with AI. It's not code analysis, but code analysis with AI. It's not zero trust, but zero trust with AI.

Besides making little sense, these products end up being hilariously expensive relative to their value. The mythical ideal of ML-based, no-rules-needed, automated attack detection starts in the solid six figures for small-ish companies and cloud deployments. That's insane! And that's just the SMB tier; think about how many small island nations could be paid to be security engineers for the cost of a Fortune 100-sized contract.

Note: Sometimes it's just product marketing slapping ML/AI onto the pitch to try to sell; it's not actually an ML product, and it's not priced like one. That's weird, but mostly fine. Saying shit about a product that doesn't make sense but sounds nice and trendy to out-of-touch CIOs and CISOs reading Gartner quadrangles is basically marketing's job. We can't begrudge mostly decent products trying to sell to that ilk too. They have a lot of money.

Generative AI + LLMs

I originally started this blog post before ChatGPT’s explosion (I’ve been sitting on blog drafts for a long time, sorry), and since then, generative AI (GenAI) has been on a massive hype cycle. I think this adds to my point. GenAI has been heralded as the future of basically every technology at the time of this posting. It will streamline incident response so even the most useless analyst can triage bugs. I've seen folks asking about using ChatGPT to manage AWS configuration vulnerabilities. Orca Security is using ChatGPT to secure the cloud, because of course they are.

It's also widely panned. Model hallucinations are a regular occurrence and can be convincing enough to trick the CISOs of major AI companies.[3] Models behave non-deterministically, and if you use managed models (like the OpenAI or Bard APIs), they can change on you in ways you can’t control or predict. Humorous mistakes like this happen all the time.

I'm pretty sure the panning is more correct. You're still operating in the same unkind learning environment.

For use cases that involve reading code to find vulnerabilities, the models are heavily hampered by the size of the search space. 200k-token context windows are great, but we need more than just attention when the cyclomatic complexity of the code you give the model is in the hundreds or thousands.
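To make the search-space problem concrete: cyclomatic complexity counts linearly independent paths, but the number of distinct execution paths can grow exponentially. A minimal sketch for the simplest case, a function made of k independent `if` statements in sequence (no loops, which would make the path count unbounded):

```python
def cyclomatic_complexity(decisions: int) -> int:
    """McCabe's M = E - N + 2P; for one function with only binary decisions, M = decisions + 1."""
    return decisions + 1


def execution_paths(sequential_ifs: int) -> int:
    """k independent if-statements in a row give 2**k distinct paths through the code."""
    return 2 ** sequential_ifs


for k in (10, 100, 1000):
    m = cyclomatic_complexity(k)
    paths = execution_paths(k)
    print(f"complexity {m}: about 10^{len(str(paths)) - 1} paths")
```

A complexity of 101 in this toy case already means 2^100 (about 1.3 × 10^30) paths, any one of which might hold the bug.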

It's impossible to evaluate or understand how your LLM made a decision. Its output is non-deterministic and prone to hallucinations and seasonal depression, which you can’t tolerate in many security use cases. It works well for freeform text and pictures partly because our brains can gloss over mistakes and still get value. That does not work at all in the cold, hard, complex, math-filled security world we inhabit.

It's expensive (relatively). The GPT-4 API costs $0.03 to $0.06 per 1,000 tokens (roughly 750 words), and GPT-4 Turbo costs a third to half of that. This is fine for the occasional query, but the moment you start trying to process a significant number of builds or events, you're burning all your budget. The GPU compute may not even be available, either for you or your AI security vendor.
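A back-of-the-envelope sketch of what "a significant number of events" means, assuming GPT-4 (8K) list pricing of $0.03 per 1,000 prompt tokens and $0.06 per 1,000 completion tokens, plus assumed volumes of 1 million events a day at 500 prompt tokens and 100 completion tokens each. Plug in your own numbers:

```python
from decimal import Decimal

# GPT-4 (8K context) list prices per 1,000 tokens.
PROMPT_PRICE_PER_1K = Decimal("0.03")
COMPLETION_PRICE_PER_1K = Decimal("0.06")


def daily_cost(events_per_day: int, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Dollar cost of sending every event through the model once."""
    prompt_cost = Decimal(events_per_day * prompt_tokens) / 1000 * PROMPT_PRICE_PER_1K
    completion_cost = Decimal(events_per_day * completion_tokens) / 1000 * COMPLETION_PRICE_PER_1K
    return prompt_cost + completion_cost


# Assumed volumes, for illustration only.
per_day = daily_cost(events_per_day=1_000_000, prompt_tokens=500, completion_tokens=100)
print(f"${per_day:,.2f} per day, ${per_day * 365:,.2f} per year")
```

Under these assumptions, that's $21,000 a day, or about $7.7 million a year, before retries, longer prompts, or GPU availability even come into play.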

Just use some firewall rules, you know?

To be fair, there are several security-related things GPT APIs and LLMs can do well. Enrichment of security alerts, especially explaining or summarizing scripts, code, and incidents, seems genuinely useful often enough to be worth doing. Many companies are now using AI to help ask and/or answer security questionnaires. That's the most useful application so far, at least in my day-to-day (though maybe don’t spend too much on a vendor here; it took only a few hours and 50 lines of Python using LangChain to get an MVP). I’d bet that in about 3 years, we'll all create, ask, answer, and assess most security questionnaires using LLMs, leaving us basically where we started but consuming a hilarious amount of GPU compute in the process.

However, these are all, at best, moderate improvements on the work of expert humans, not something that will change our core security problems. That applies essentially to all current uses of ML. Where it works, we can streamline and improve the jobs of our experts, not replace them. That is valuable, and we should always be exploring ways to improve; it’s just not a panacea.

Also, undoing some of that value, people think ChatGPT will improve execs' and board members' ability to understand complex topics:

The world may be able to more quickly “close the knowledge gap that exists between non-security executives and security executives” using ChatGPT, Boyce said. For instance, “if you were a CEO of a financial services company, and you wanted to understand how Russia-Ukraine was going to impact you [in terms of cybersecurity], you could be searching on Google for hours putting together your own point-of-view on this, and looking for the right questions to ask.” With ChatGPT, “you honestly may be able to do this in minutes to be able to educate yourself.”

I can't imagine anything more horrifying than trying to explain the nuances of how Russia-Ukraine affects security posture to a financial services CEO who now thinks they know how security works because they spent several minutes with ChatGPT. If that’s my future, I’d prefer to keep manually filling out questionnaires, thanks.

I'd Love To Hear From You

Do you agree? Disagree? Intensely? Do you have any other feedback for me? Please leave a comment below; I'd love to hear it!

I'm not great at self-promotion, so if you enjoyed this post, please subscribe so you can get more and farm that sweet karma by posting it on HN and various social media sites. If it filled you with a deep rage, please subscribe so you can hate-read it and hate-share it with others so others can, too.

[1] https://web.archive.org/web/20230921160716/https://docs.lacework.net/console/introduction-to-aws-alerts, for whenever the Wiz acquisition closes and their documentation site goes down

[2] I don’t mean to pick on Lacework here. I happened to know slightly more about their product because their BDRs were very happy to give me several pairs of AirPods, a Nintendo Switch, and a Solo Stove to listen to a few 20-minute product demos. I guess that customer acquisition approach didn’t help the burn rate, which is sad because now I have to spend money on my family’s birthday gifts.

[3] I’m not trying to throw shade at Anthropic here. My point is that the output can be so convincing that it fools people who very much know better.

Would love to hear more of your thoughts on different vendors using AI and if anything at all out there has been useful/changed since given it’s been about a year since this was posted